Merge #486: Add pippenger_wnaf for multi-multiplication

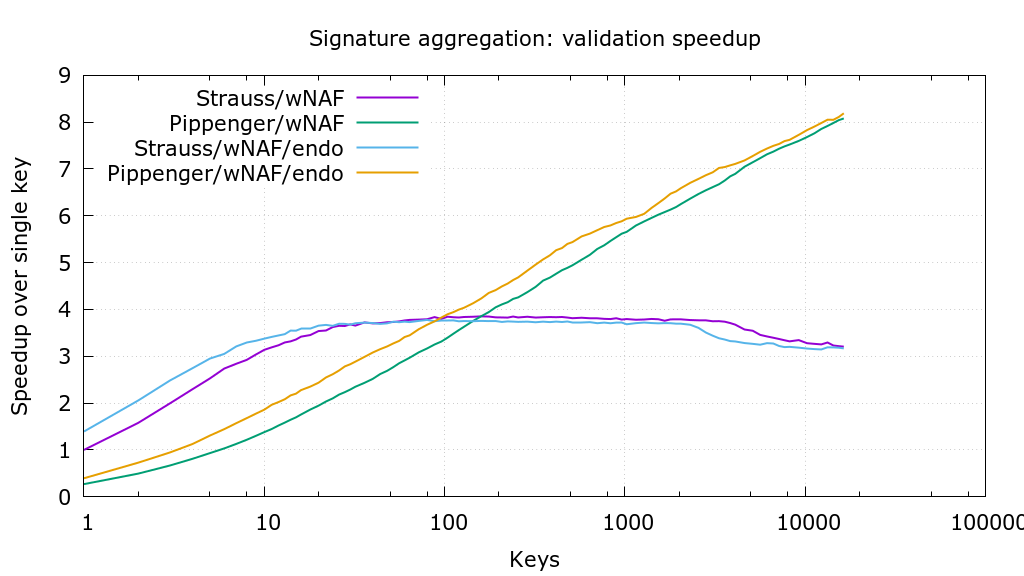

- d2f9c6b Use more precise pippenger bucket windows (Jonas Nick)
- 4c950bb Save some additions per window in _pippenger_wnaf (Peter Dettman)
- a58f543 Add flags for choosing algorithm in ecmult_multi benchmark (Jonas Nick)
- 36b22c9 Use scratch space dependent batching in ecmult_multi (Jonas Nick)
- 355a38f Add pippenger_wnaf ecmult_multi (Jonas Nick)
- bc65aa7 Add bench_ecmult (Pieter Wuille)
- dba5471 Add ecmult_multi tests (Andrew Poelstra)
- 8c1c831 Generalize Strauss to support multiple points (Pieter Wuille)
- 548de42 add resizeable scratch space API (Andrew Poelstra)

Pull request description:

This PR is based on #473 and adds a variant of "Pippenger's algorithm" (see [Bernstein et al., Faster batch forgery identification](https://eprint.iacr.org/2012/549.pdf), page 15, and https://github.com/scipr-lab/libff/pull/10) for point multi-multiplication that performs better with a large number of points than Strauss' algorithm.

Thanks to @sipa for providing `wnaf_fixed`, benchmarking, and the crucial suggestion to use affine addition.

The PR also makes `ecmult_multi` decide which algorithm to use, based on the number of points and the available scratch space. For restricted scratch spaces this can be further optimized in the future (e.g. a 35kB scratch space allows batches of 11 points with Strauss or 95 points with Pippenger; choosing Pippenger would be 5% faster). As soon as this PR has received some feedback I'll repeat the benchmarks to determine the optimal `pippenger_bucket_window` with the new benchmarking code in #473.

Tree-SHA512: 8e155107a00d35f412300275803f912b1d228b7adff578bc4754c5b29641100b51b9d37f989316b636f7144e6b199febe7de302a44f498bbfd8d463bdbe31a5c
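The bucket idea behind Pippenger-style multi-multiplication, as referenced in the pull request description, can be illustrated with a toy sketch. It assumes a stand-in group (64-bit integers under wrapping addition) rather than secp256k1 points, plain unsigned window digits rather than the wNAF digits used by `pippenger_wnaf`, and a hypothetical fixed window `TOY_WINDOW`; every `toy_` name is invented for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Toy stand-in for the curve group: uint64_t under wrapping addition.
 * "Adding points" is +, "doubling" is x + x. This is NOT secp256k1. */
#define TOY_WINDOW 4                         /* hypothetical bucket window, in bits */
#define TOY_BUCKETS ((1 << TOY_WINDOW) - 1)  /* digit 0 needs no bucket */

/* Returns sum_i scalars[i] * points[i] (mod 2^64) using the bucket method. */
static uint64_t toy_pippenger(const uint32_t *scalars, const uint64_t *points, size_t n) {
    uint64_t result = 0;
    int shift;
    assert(32 % TOY_WINDOW == 0);
    /* Walk the 32-bit scalars window by window, most significant first. */
    for (shift = 32 - TOY_WINDOW; shift >= 0; shift -= TOY_WINDOW) {
        uint64_t buckets[TOY_BUCKETS] = {0};
        uint64_t running = 0, window_sum = 0;
        size_t i;
        int d;
        /* Multiply the accumulated result by 2^TOY_WINDOW via doublings. */
        for (d = 0; d < TOY_WINDOW; ++d) {
            result += result;
        }
        /* Add each point to the bucket selected by its digit in this window. */
        for (i = 0; i < n; ++i) {
            uint32_t digit = (scalars[i] >> shift) & TOY_BUCKETS;
            if (digit != 0) {
                buckets[digit - 1] += points[i];
            }
        }
        /* Running sum: the bucket for digit j is counted j times, using additions only. */
        for (d = TOY_BUCKETS - 1; d >= 0; --d) {
            running += buckets[d];
            window_sum += running;
        }
        result += window_sum;
    }
    return result;
}

/* Naive reference: one scalar multiplication per point. */
static uint64_t toy_naive(const uint32_t *scalars, const uint64_t *points, size_t n) {
    uint64_t result = 0;
    size_t i;
    for (i = 0; i < n; ++i) {
        result += (uint64_t)scalars[i] * points[i];
    }
    return result;
}
```

In this toy model each window costs roughly n bucket additions plus about 2^(c+1) additions for the running sum (c being the window width), which gives some intuition for why larger batches favor wider bucket windows.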

commit c77fc08597

@@ -42,6 +42,8 @@ noinst_HEADERS += src/field_5x52_asm_impl.h
 noinst_HEADERS += src/java/org_bitcoin_NativeSecp256k1.h
 noinst_HEADERS += src/java/org_bitcoin_Secp256k1Context.h
 noinst_HEADERS += src/util.h
+noinst_HEADERS += src/scratch.h
+noinst_HEADERS += src/scratch_impl.h
 noinst_HEADERS += src/testrand.h
 noinst_HEADERS += src/testrand_impl.h
 noinst_HEADERS += src/hash.h
@@ -79,7 +81,7 @@ libsecp256k1_jni_la_CPPFLAGS = -DSECP256K1_BUILD $(JNI_INCLUDES)
 
 noinst_PROGRAMS =
 if USE_BENCHMARK
-noinst_PROGRAMS += bench_verify bench_sign bench_internal
+noinst_PROGRAMS += bench_verify bench_sign bench_internal bench_ecmult
 bench_verify_SOURCES = src/bench_verify.c
 bench_verify_LDADD = libsecp256k1.la $(SECP_LIBS) $(SECP_TEST_LIBS) $(COMMON_LIB)
 bench_sign_SOURCES = src/bench_sign.c
@@ -87,6 +89,9 @@ bench_sign_LDADD = libsecp256k1.la $(SECP_LIBS) $(SECP_TEST_LIBS) $(COMMON_LIB)
 bench_internal_SOURCES = src/bench_internal.c
 bench_internal_LDADD = $(SECP_LIBS) $(COMMON_LIB)
 bench_internal_CPPFLAGS = -DSECP256K1_BUILD $(SECP_INCLUDES)
+bench_ecmult_SOURCES = src/bench_ecmult.c
+bench_ecmult_LDADD = $(SECP_LIBS) $(COMMON_LIB)
+bench_ecmult_CPPFLAGS = -DSECP256K1_BUILD $(SECP_INCLUDES)
 endif
 
 TESTS =
@@ -159,6 +164,7 @@ $(gen_context_BIN): $(gen_context_OBJECTS)
 $(libsecp256k1_la_OBJECTS): src/ecmult_static_context.h
 $(tests_OBJECTS): src/ecmult_static_context.h
 $(bench_internal_OBJECTS): src/ecmult_static_context.h
+$(bench_ecmult_OBJECTS): src/ecmult_static_context.h
 
 src/ecmult_static_context.h: $(gen_context_BIN)
 	./$(gen_context_BIN)

@@ -42,6 +42,19 @@ extern "C" {
  */
 typedef struct secp256k1_context_struct secp256k1_context;
 
+/** Opaque data structure that holds rewriteable "scratch space"
+ *
+ * The purpose of this structure is to replace dynamic memory allocations,
+ * because we target architectures where this may not be available. It is
+ * essentially a resizable (within specified parameters) block of bytes,
+ * which is initially created either by memory allocation or TODO as a pointer
+ * into some fixed rewritable space.
+ *
+ * Unlike the context object, this cannot safely be shared between threads
+ * without additional synchronization logic.
+ */
+typedef struct secp256k1_scratch_space_struct secp256k1_scratch_space;
+
 /** Opaque data structure that holds a parsed and valid public key.
  *
  * The exact representation of data inside is implementation defined and not
@@ -243,6 +256,28 @@ SECP256K1_API void secp256k1_context_set_error_callback(
     const void* data
 ) SECP256K1_ARG_NONNULL(1);
 
+/** Create a secp256k1 scratch space object.
+ *
+ * Returns: a newly created scratch space.
+ * Args: ctx: an existing context object (cannot be NULL)
+ * In: init_size: initial amount of memory to allocate
+ * max_size: maximum amount of memory to allocate
+ */
+SECP256K1_API SECP256K1_WARN_UNUSED_RESULT secp256k1_scratch_space* secp256k1_scratch_space_create(
+    const secp256k1_context* ctx,
+    size_t init_size,
+    size_t max_size
+) SECP256K1_ARG_NONNULL(1);
+
+/** Destroy a secp256k1 scratch space.
+ *
+ * The pointer may not be used afterwards.
+ * Args: scratch: space to destroy
+ */
+SECP256K1_API void secp256k1_scratch_space_destroy(
+    secp256k1_scratch_space* scratch
+);
+
 /** Parse a variable-length public key into the pubkey object.
  *
  * Returns: 1 if the public key was fully valid.
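The scratch space described in the header comment can be pictured as a bump allocator with a hard size limit. The sketch below is a toy model under that assumption, not the library's implementation: the `toy_scratch` type, its functions, and the 16-byte rounding are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical model of a resizable-within-limits block of bytes. */
typedef struct {
    unsigned char *data;
    size_t offset;    /* bytes handed out so far */
    size_t capacity;  /* bytes currently backed by memory */
    size_t max_size;  /* hard upper bound on capacity */
} toy_scratch;

static toy_scratch *toy_scratch_create(size_t init_size, size_t max_size) {
    toy_scratch *s;
    assert(init_size <= max_size);
    s = malloc(sizeof(*s));
    if (s == NULL) return NULL;
    s->data = malloc(init_size ? init_size : 1);
    if (s->data == NULL) {
        free(s);
        return NULL;
    }
    s->offset = 0;
    s->capacity = init_size;
    s->max_size = max_size;
    return s;
}

/* Discards all allocations and ensures at least `size` bytes are available.
 * Returns 1 on success, 0 if `size` exceeds max_size or growing fails. */
static int toy_scratch_resize(toy_scratch *s, size_t size) {
    s->offset = 0;
    if (size > s->max_size) return 0;
    if (size > s->capacity) {
        unsigned char *p = realloc(s->data, size);
        if (p == NULL) return 0;
        s->data = p;
        s->capacity = size;
    }
    return 1;
}

/* Hands out the next chunk, rounded up to a multiple of 16 bytes.
 * Returns NULL when the remaining capacity is too small. */
static void *toy_scratch_alloc(toy_scratch *s, size_t size) {
    void *ret;
    size = (size + 15) & ~(size_t)15;
    if (size > s->capacity - s->offset) return NULL;
    ret = s->data + s->offset;
    s->offset += size;
    return ret;
}

static void toy_scratch_destroy(toy_scratch *s) {
    if (s != NULL) {
        free(s->data);
        free(s);
    }
}
```

A caller in this model sizes the space once, resets it before each computation, and never calls `malloc` in the hot path, which matches the stated goal of avoiding dynamic allocation. Like the real object, it is not safe to share between threads without extra synchronization.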

16

src/bench.h

@@ -8,6 +8,7 @@
 #define SECP256K1_BENCH_H
 
 #include <stdio.h>
+#include <string.h>
 #include <math.h>
 #include "sys/time.h"
 
@@ -63,4 +64,19 @@ void run_benchmark(char *name, void (*benchmark)(void*), void (*setup)(void*), v
     printf("us\n");
 }
 
+int have_flag(int argc, char** argv, char *flag) {
+    char** argm = argv + argc;
+    argv++;
+    if (argv == argm) {
+        return 1;
+    }
+    while (argv != NULL && argv != argm) {
+        if (strcmp(*argv, flag) == 0) {
+            return 1;
+        }
+        argv++;
+    }
+    return 0;
+}
+
 #endif /* SECP256K1_BENCH_H */
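The `have_flag` helper moved into `bench.h` has one behavior that is easy to miss: with no command-line arguments at all it reports every flag as present, so a benchmark binary run without arguments executes everything. A self-contained copy shows the three cases; the `static` and `const` qualifiers are this sketch's own additions, the logic is unchanged.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Copy of the helper from the diff above; only static/const are new. */
static int have_flag(int argc, char **argv, const char *flag) {
    char **argm = argv + argc;  /* one past the last argument */
    argv++;                     /* skip the program name */
    if (argv == argm) {
        return 1;               /* no arguments: treat every flag as set */
    }
    while (argv != NULL && argv != argm) {
        if (strcmp(*argv, flag) == 0) {
            return 1;           /* exact match found */
        }
        argv++;
    }
    return 0;                   /* arguments given, but not this flag */
}
```

Note that matching is by exact string comparison, so `scalar` and `scalar_add` are different flags.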

196

src/bench_ecmult.c

Normal file

@@ -0,0 +1,196 @@
+/**********************************************************************
+ * Copyright (c) 2017 Pieter Wuille *
+ * Distributed under the MIT software license, see the accompanying *
+ * file COPYING or http://www.opensource.org/licenses/mit-license.php.*
+ **********************************************************************/
+#include <stdio.h>
+
+#include "include/secp256k1.h"
+
+#include "util.h"
+#include "hash_impl.h"
+#include "num_impl.h"
+#include "field_impl.h"
+#include "group_impl.h"
+#include "scalar_impl.h"
+#include "ecmult_impl.h"
+#include "bench.h"
+#include "secp256k1.c"
+
+#define POINTS 32768
+#define ITERS 10000
+
+typedef struct {
+    /* Setup once in advance */
+    secp256k1_context* ctx;
+    secp256k1_scratch_space* scratch;
+    secp256k1_scalar* scalars;
+    secp256k1_ge* pubkeys;
+    secp256k1_scalar* seckeys;
+    secp256k1_gej* expected_output;
+    secp256k1_ecmult_multi_func ecmult_multi;
+
+    /* Changes per test */
+    size_t count;
+    int includes_g;
+
+    /* Changes per test iteration */
+    size_t offset1;
+    size_t offset2;
+
+    /* Test output. */
+    secp256k1_gej* output;
+} bench_data;
+
+static int bench_callback(secp256k1_scalar* sc, secp256k1_ge* ge, size_t idx, void* arg) {
+    bench_data* data = (bench_data*)arg;
+    if (data->includes_g) ++idx;
+    if (idx == 0) {
+        *sc = data->scalars[data->offset1];
+        *ge = secp256k1_ge_const_g;
+    } else {
+        *sc = data->scalars[(data->offset1 + idx) % POINTS];
+        *ge = data->pubkeys[(data->offset2 + idx - 1) % POINTS];
+    }
+    return 1;
+}
+
+static void bench_ecmult(void* arg) {
+    bench_data* data = (bench_data*)arg;
+
+    size_t count = data->count;
+    int includes_g = data->includes_g;
+    size_t iters = 1 + ITERS / count;
+    size_t iter;
+
+    for (iter = 0; iter < iters; ++iter) {
+        data->ecmult_multi(&data->ctx->ecmult_ctx, data->scratch, &data->output[iter], data->includes_g ? &data->scalars[data->offset1] : NULL, bench_callback, arg, count - includes_g);
+        data->offset1 = (data->offset1 + count) % POINTS;
+        data->offset2 = (data->offset2 + count - 1) % POINTS;
+    }
+}
+
+static void bench_ecmult_setup(void* arg) {
+    bench_data* data = (bench_data*)arg;
+    data->offset1 = (data->count * 0x537b7f6f + 0x8f66a481) % POINTS;
+    data->offset2 = (data->count * 0x7f6f537b + 0x6a1a8f49) % POINTS;
+}
+
+static void bench_ecmult_teardown(void* arg) {
+    bench_data* data = (bench_data*)arg;
+    size_t iters = 1 + ITERS / data->count;
+    size_t iter;
+    /* Verify the results in teardown, to avoid doing comparisons while benchmarking. */
+    for (iter = 0; iter < iters; ++iter) {
+        secp256k1_gej tmp;
+        secp256k1_gej_add_var(&tmp, &data->output[iter], &data->expected_output[iter], NULL);
+        CHECK(secp256k1_gej_is_infinity(&tmp));
+    }
+}
+
+static void generate_scalar(uint32_t num, secp256k1_scalar* scalar) {
+    secp256k1_sha256 sha256;
+    unsigned char c[11] = {'e', 'c', 'm', 'u', 'l', 't', 0, 0, 0, 0};
+    unsigned char buf[32];
+    int overflow = 0;
+    c[6] = num;
+    c[7] = num >> 8;
+    c[8] = num >> 16;
+    c[9] = num >> 24;
+    secp256k1_sha256_initialize(&sha256);
+    secp256k1_sha256_write(&sha256, c, sizeof(c));
+    secp256k1_sha256_finalize(&sha256, buf);
+    secp256k1_scalar_set_b32(scalar, buf, &overflow);
+    CHECK(!overflow);
+}
+
+static void run_test(bench_data* data, size_t count, int includes_g) {
+    char str[32];
+    static const secp256k1_scalar zero = SECP256K1_SCALAR_CONST(0, 0, 0, 0, 0, 0, 0, 0);
+    size_t iters = 1 + ITERS / count;
+    size_t iter;
+
+    data->count = count;
+    data->includes_g = includes_g;
+
+    /* Compute (the negation of) the expected results directly. */
+    data->offset1 = (data->count * 0x537b7f6f + 0x8f66a481) % POINTS;
+    data->offset2 = (data->count * 0x7f6f537b + 0x6a1a8f49) % POINTS;
+    for (iter = 0; iter < iters; ++iter) {
+        secp256k1_scalar tmp;
+        secp256k1_scalar total = data->scalars[(data->offset1++) % POINTS];
+        size_t i = 0;
+        for (i = 0; i + 1 < count; ++i) {
+            secp256k1_scalar_mul(&tmp, &data->seckeys[(data->offset2++) % POINTS], &data->scalars[(data->offset1++) % POINTS]);
+            secp256k1_scalar_add(&total, &total, &tmp);
+        }
+        secp256k1_scalar_negate(&total, &total);
+        secp256k1_ecmult(&data->ctx->ecmult_ctx, &data->expected_output[iter], NULL, &zero, &total);
+    }
+
+    /* Run the benchmark. */
+    sprintf(str, includes_g ? "ecmult_%ig" : "ecmult_%i", (int)count);
+    run_benchmark(str, bench_ecmult, bench_ecmult_setup, bench_ecmult_teardown, data, 10, count * (1 + ITERS / count));
+}
+
+int main(int argc, char **argv) {
+    bench_data data;
+    int i, p;
+    secp256k1_gej* pubkeys_gej;
+    size_t scratch_size;
+
+    if (argc > 1) {
+        if(have_flag(argc, argv, "pippenger_wnaf")) {
+            printf("Using pippenger_wnaf:\n");
+            data.ecmult_multi = secp256k1_ecmult_pippenger_batch_single;
+        } else if(have_flag(argc, argv, "strauss_wnaf")) {
+            printf("Using strauss_wnaf:\n");
+            data.ecmult_multi = secp256k1_ecmult_strauss_batch_single;
+        }
+    } else {
+        data.ecmult_multi = secp256k1_ecmult_multi_var;
+    }
+
+    /* Allocate stuff */
+    data.ctx = secp256k1_context_create(SECP256K1_CONTEXT_SIGN | SECP256K1_CONTEXT_VERIFY);
+    scratch_size = secp256k1_strauss_scratch_size(POINTS) + STRAUSS_SCRATCH_OBJECTS*16;
+    data.scratch = secp256k1_scratch_space_create(data.ctx, scratch_size, scratch_size);
+    data.scalars = malloc(sizeof(secp256k1_scalar) * POINTS);
+    data.seckeys = malloc(sizeof(secp256k1_scalar) * POINTS);
+    data.pubkeys = malloc(sizeof(secp256k1_ge) * POINTS);
+    data.expected_output = malloc(sizeof(secp256k1_gej) * (ITERS + 1));
+    data.output = malloc(sizeof(secp256k1_gej) * (ITERS + 1));
+
+    /* Generate a set of scalars, and private/public keypairs. */
+    pubkeys_gej = malloc(sizeof(secp256k1_gej) * POINTS);
+    secp256k1_gej_set_ge(&pubkeys_gej[0], &secp256k1_ge_const_g);
+    secp256k1_scalar_set_int(&data.seckeys[0], 1);
+    for (i = 0; i < POINTS; ++i) {
+        generate_scalar(i, &data.scalars[i]);
+        if (i) {
+            secp256k1_gej_double_var(&pubkeys_gej[i], &pubkeys_gej[i - 1], NULL);
+            secp256k1_scalar_add(&data.seckeys[i], &data.seckeys[i - 1], &data.seckeys[i - 1]);
+        }
+    }
+    secp256k1_ge_set_all_gej_var(data.pubkeys, pubkeys_gej, POINTS, &data.ctx->error_callback);
+    free(pubkeys_gej);
+
+    for (i = 1; i <= 8; ++i) {
+        run_test(&data, i, 1);
+    }
+
+    for (p = 0; p <= 11; ++p) {
+        for (i = 9; i <= 16; ++i) {
+            run_test(&data, i << p, 1);
+        }
+    }
+    secp256k1_context_destroy(data.ctx);
+    secp256k1_scratch_space_destroy(data.scratch);
+    free(data.scalars);
+    free(data.pubkeys);
+    free(data.seckeys);
+    free(data.output);
+    free(data.expected_output);
+
+    return(0);
+}
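The benchmark in `src/bench_ecmult.c` checks results without a second multi-multiplication: because every public key is a known multiple of G, the expected sum collapses to a single scalar times G, and storing its negation lets teardown verify each output with one addition and an is-infinity test. A toy version of that identity follows, assuming 64-bit wrapping integers in place of curve points and a hypothetical generator `TOY_G`; all `toy_` names are invented for this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_G UINT64_C(0x9E3779B97F4A7C15)  /* hypothetical stand-in for the generator G */

/* "Public key" for a secret key: seckey * G in the toy group. */
static uint64_t toy_pubkey(uint64_t seckey) {
    return seckey * TOY_G;
}

/* Multi-multiplication result: g_scalar * G + sum_i scalars[i] * pubkeys[i]. */
static uint64_t toy_multi_sum(uint64_t g_scalar, const uint64_t *scalars,
                              const uint64_t *pubkeys, size_t n) {
    uint64_t r = g_scalar * TOY_G;
    size_t i;
    for (i = 0; i < n; ++i) {
        r += scalars[i] * pubkeys[i];
    }
    return r;
}

/* Negated expected result, computed from the secret keys alone:
 * -(g_scalar + sum_i scalars[i] * seckeys[i]) * G. */
static uint64_t toy_expected_neg(uint64_t g_scalar, const uint64_t *scalars,
                                 const uint64_t *seckeys, size_t n) {
    uint64_t total = g_scalar;
    size_t i;
    for (i = 0; i < n; ++i) {
        total += scalars[i] * seckeys[i];
    }
    return (0 - total) * TOY_G;
}

/* The toy "point at infinity" is 0, so output + expected_neg must be 0. */
static int toy_check(uint64_t output, uint64_t expected_neg) {
    return output + expected_neg == 0;
}
```

The real benchmark builds its key pairs by repeated doubling (secret keys 1, 2, 4, ...), which keeps setup cheap while still giving the multi-multiplication distinct points to work on.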

@@ -324,21 +324,6 @@ void bench_num_jacobi(void* arg) {
 }
 #endif
 
-int have_flag(int argc, char** argv, char *flag) {
-    char** argm = argv + argc;
-    argv++;
-    if (argv == argm) {
-        return 1;
-    }
-    while (argv != NULL && argv != argm) {
-        if (strcmp(*argv, flag) == 0) {
-            return 1;
-        }
-        argv++;
-    }
-    return 0;
-}
-
 int main(int argc, char **argv) {
     bench_inv data;
     if (have_flag(argc, argv, "scalar") || have_flag(argc, argv, "add")) run_benchmark("scalar_add", bench_scalar_add, bench_setup, NULL, &data, 10, 2000000);

18

src/ecmult.h

@@ -1,5 +1,5 @@
 /**********************************************************************
- * Copyright (c) 2013, 2014 Pieter Wuille *
+ * Copyright (c) 2013, 2014, 2017 Pieter Wuille, Andrew Poelstra *
  * Distributed under the MIT software license, see the accompanying *
  * file COPYING or http://www.opensource.org/licenses/mit-license.php.*
  **********************************************************************/
@@ -9,6 +9,8 @@
 
 #include "num.h"
 #include "group.h"
+#include "scalar.h"
+#include "scratch.h"
 
 typedef struct {
     /* For accelerating the computation of a*P + b*G: */
@@ -28,4 +30,18 @@ static int secp256k1_ecmult_context_is_built(const secp256k1_ecmult_context *ctx
 /** Double multiply: R = na*A + ng*G */
 static void secp256k1_ecmult(const secp256k1_ecmult_context *ctx, secp256k1_gej *r, const secp256k1_gej *a, const secp256k1_scalar *na, const secp256k1_scalar *ng);
 
+typedef int (secp256k1_ecmult_multi_callback)(secp256k1_scalar *sc, secp256k1_ge *pt, size_t idx, void *data);
+
+/**
+ * Multi-multiply: R = inp_g_sc * G + sum_i ni * Ai.
+ * Chooses the right algorithm for a given number of points and scratch space
+ * size. Resets and overwrites the given scratch space. If the points do not
+ * fit in the scratch space the algorithm is repeatedly run with batches of
+ * points.
+ * Returns: 1 on success (including when inp_g_sc is NULL and n is 0)
+ * 0 if there is not enough scratch space for a single point or
+ * callback returns 0
+ */
+static int secp256k1_ecmult_multi_var(const secp256k1_ecmult_context *ctx, secp256k1_scratch *scratch, secp256k1_gej *r, const secp256k1_scalar *inp_g_sc, secp256k1_ecmult_multi_callback cb, void *cbdata, size_t n);
+
 #endif /* SECP256K1_ECMULT_H */
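The comment on `secp256k1_ecmult_multi_var` describes pulling inputs through a callback and, when the scratch space is too small, processing the points in batches. The sketch below mimics that control flow with toy integer arithmetic. The `toy_` names, the `max_points` parameter (standing in for whatever the scratch space can hold), and the even batch-splitting rule are assumptions for illustration; the real splitting logic is not shown in this diff.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same shape as secp256k1_ecmult_multi_callback, with toy integer types. */
typedef int (toy_multi_callback)(uint64_t *sc, uint64_t *pt, size_t idx, void *data);

typedef struct {
    const uint64_t *scalars;
    const uint64_t *points;
} toy_inputs;

/* Callback that serves the idx-th (scalar, point) pair from two arrays. */
static int toy_cb(uint64_t *sc, uint64_t *pt, size_t idx, void *data) {
    const toy_inputs *in = (const toy_inputs *)data;
    *sc = in->scalars[idx];
    *pt = in->points[idx];
    return 1;
}

/* One batch: sum of sc * pt over indices [offset, offset + n). */
static int toy_batch(uint64_t *r, toy_multi_callback cb, void *cbdata, size_t n, size_t offset) {
    size_t i;
    *r = 0;
    for (i = 0; i < n; ++i) {
        uint64_t sc, pt;
        if (!cb(&sc, &pt, offset + i, cbdata)) return 0;
        *r += sc * pt;
    }
    return 1;
}

/* Splits n points into batches of at most max_points and adds up the partial
 * results. Returns 1 on success (including n == 0), 0 if not even one point
 * fits or the callback fails. */
static int toy_multi(uint64_t *r, toy_multi_callback cb, void *cbdata,
                     size_t n, size_t max_points, size_t *batches_out) {
    size_t n_batches, per_batch, offset = 0;
    *r = 0;
    *batches_out = 0;
    if (n == 0) return 1;
    if (max_points == 0) return 0;
    n_batches = (n + max_points - 1) / max_points;  /* ceil(n / max_points) */
    per_batch = (n + n_batches - 1) / n_batches;    /* spread points evenly */
    while (offset < n) {
        uint64_t part;
        size_t take = n - offset < per_batch ? n - offset : per_batch;
        if (!toy_batch(&part, cb, cbdata, take, offset)) return 0;
        *r += part;
        offset += take;
        ++*batches_out;
    }
    return 1;
}
```

Passing an offset into each batch lets the same callback serve every batch without copying inputs, which is one plausible reason for the index-based callback signature.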

@@ -12,13 +12,6 @@
 #include "ecmult_const.h"
 #include "ecmult_impl.h"
 
-#ifdef USE_ENDOMORPHISM
-    #define WNAF_BITS 128
-#else
-    #define WNAF_BITS 256
-#endif
-#define WNAF_SIZE(w) ((WNAF_BITS + (w) - 1) / (w))
-
 /* This is like `ECMULT_TABLE_GET_GE` but is constant time */
 #define ECMULT_CONST_TABLE_GET_GE(r,pre,n,w) do { \
     int m; \

@@ -1,13 +1,14 @@
-/**********************************************************************
- * Copyright (c) 2013, 2014 Pieter Wuille *
- * Distributed under the MIT software license, see the accompanying *
- * file COPYING or http://www.opensource.org/licenses/mit-license.php.*
- **********************************************************************/
+/*****************************************************************************
+ * Copyright (c) 2013, 2014, 2017 Pieter Wuille, Andrew Poelstra, Jonas Nick *
+ * Distributed under the MIT software license, see the accompanying *
+ * file COPYING or http://www.opensource.org/licenses/mit-license.php. *
+ *****************************************************************************/
 
 #ifndef SECP256K1_ECMULT_IMPL_H
 #define SECP256K1_ECMULT_IMPL_H
 
 #include <string.h>
+#include <stdint.h>
 
 #include "group.h"
 #include "scalar.h"
@@ -41,9 +42,35 @@
 #endif
 #endif
 
+#ifdef USE_ENDOMORPHISM
+    #define WNAF_BITS 128
+#else
+    #define WNAF_BITS 256
+#endif
+#define WNAF_SIZE(w) ((WNAF_BITS + (w) - 1) / (w))
+
 /** The number of entries a table with precomputed multiples needs to have. */
 #define ECMULT_TABLE_SIZE(w) (1 << ((w)-2))
 
+/* The number of objects allocated on the scratch space for ecmult_multi algorithms */
+#define PIPPENGER_SCRATCH_OBJECTS 6
+#define STRAUSS_SCRATCH_OBJECTS 6
+
+#define PIPPENGER_MAX_BUCKET_WINDOW 12
+
+/* Minimum number of points for which pippenger_wnaf is faster than strauss wnaf */
+#ifdef USE_ENDOMORPHISM
+    #define ECMULT_PIPPENGER_THRESHOLD 88
+#else
+    #define ECMULT_PIPPENGER_THRESHOLD 160
+#endif
+
+#ifdef USE_ENDOMORPHISM
+    #define ECMULT_MAX_POINTS_PER_BATCH 5000000
+#else
+    #define ECMULT_MAX_POINTS_PER_BATCH 10000000
+#endif
+
 /** Fill a table 'prej' with precomputed odd multiples of a. Prej will contain
  * the values [1*a,3*a,...,(2*n-1)*a], so it space for n values. zr[0] will
  * contain prej[0].z / a.z. The other zr[i] values = prej[i].z / prej[i-1].z.
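To make the constants in this hunk concrete, the sketch below evaluates `WNAF_SIZE` and `ECMULT_TABLE_SIZE` for a few window sizes and shows one way the Pippenger threshold could be consulted. The macro definitions are copied from the diff (taking the branch without `USE_ENDOMORPHISM`); `toy_use_pippenger` and its simple `n >= threshold` rule are assumptions, since the actual selection code is not part of this excerpt.

```c
#include <assert.h>
#include <stddef.h>

/* Copied from the diff, taking the branch without USE_ENDOMORPHISM. */
#define WNAF_BITS 256
#define WNAF_SIZE(w) ((WNAF_BITS + (w) - 1) / (w))
#define ECMULT_TABLE_SIZE(w) (1 << ((w)-2))
#define ECMULT_PIPPENGER_THRESHOLD 160

/* Number of fixed-width digits for a window of w bits: ceil(WNAF_BITS / w). */
static int toy_wnaf_digits(int w) {
    assert(w > 0);
    return WNAF_SIZE(w);
}

/* Entries in a table of odd multiples for window w: 1, 3, ..., 2^(w-1) - 1. */
static int toy_table_entries(int w) {
    assert(w >= 2);
    return ECMULT_TABLE_SIZE(w);
}

/* Hypothetical selection rule: prefer Pippenger at or above the threshold. */
static int toy_use_pippenger(size_t n_points) {
    return n_points >= ECMULT_PIPPENGER_THRESHOLD;
}
```

For example, a window of 4 bits needs 64 digits for a 256-bit scalar, while the maximum bucket window of 12 needs 22.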

@ -283,50 +310,78 @@ static int secp256k1_ecmult_wnaf(int *wnaf, int len, const secp256k1_scalar *a,

|

|||||||

return last_set_bit + 1;

|

return last_set_bit + 1;

|

||||||

}

|

}

|

||||||

|

|

||||||

static void secp256k1_ecmult(const secp256k1_ecmult_context *ctx, secp256k1_gej *r, const secp256k1_gej *a, const secp256k1_scalar *na, const secp256k1_scalar *ng) {

|

struct secp256k1_strauss_point_state {

|

||||||

secp256k1_ge pre_a[ECMULT_TABLE_SIZE(WINDOW_A)];

|

|

||||||

secp256k1_ge tmpa;

|

|

||||||

secp256k1_fe Z;

|

|

||||||

#ifdef USE_ENDOMORPHISM

|

#ifdef USE_ENDOMORPHISM

|

||||||

secp256k1_ge pre_a_lam[ECMULT_TABLE_SIZE(WINDOW_A)];

|

|

||||||

secp256k1_scalar na_1, na_lam;

|

secp256k1_scalar na_1, na_lam;

|

||||||

/* Splitted G factors. */

|

|

||||||

secp256k1_scalar ng_1, ng_128;

|

|

||||||

int wnaf_na_1[130];

|

int wnaf_na_1[130];

|

||||||

int wnaf_na_lam[130];

|

int wnaf_na_lam[130];

|

||||||

int bits_na_1;

|

int bits_na_1;

|

||||||

int bits_na_lam;

|

int bits_na_lam;

|

||||||

int wnaf_ng_1[129];

|

|

||||||

int bits_ng_1;

|

|

||||||

int wnaf_ng_128[129];

|

|

||||||

int bits_ng_128;

|

|

||||||

#else

|

#else

|

||||||

int wnaf_na[256];

|

int wnaf_na[256];

|

||||||

int bits_na;

|

int bits_na;

|

||||||

|

#endif

|

||||||

|

size_t input_pos;

|

||||||

|

};

|

||||||

|

|

||||||

|

struct secp256k1_strauss_state {

|

||||||

|

secp256k1_gej* prej;

|

||||||

|

secp256k1_fe* zr;

|

||||||

|

secp256k1_ge* pre_a;

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

secp256k1_ge* pre_a_lam;

|

||||||

|

#endif

|

||||||

|

struct secp256k1_strauss_point_state* ps;

|

||||||

|

};

|

||||||

|

|

||||||

|

static void secp256k1_ecmult_strauss_wnaf(const secp256k1_ecmult_context *ctx, const struct secp256k1_strauss_state *state, secp256k1_gej *r, int num, const secp256k1_gej *a, const secp256k1_scalar *na, const secp256k1_scalar *ng) {

|

||||||

|

secp256k1_ge tmpa;

|

||||||

|

secp256k1_fe Z;

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

/* Splitted G factors. */

|

||||||

|

secp256k1_scalar ng_1, ng_128;

|

||||||

|

int wnaf_ng_1[129];

|

||||||

|

int bits_ng_1 = 0;

|

||||||

|

int wnaf_ng_128[129];

|

||||||

|

int bits_ng_128 = 0;

|

||||||

|

#else

|

||||||

int wnaf_ng[256];

|

int wnaf_ng[256];

|

||||||

int bits_ng;

|

int bits_ng = 0;

|

||||||

#endif

|

#endif

|

||||||

int i;

|

int i;

|

||||||

int bits;

|

int bits = 0;

|

||||||

|

int np;

|

||||||

|

int no = 0;

|

||||||

|

|

||||||

|

for (np = 0; np < num; ++np) {

|

||||||

|

if (secp256k1_scalar_is_zero(&na[np]) || secp256k1_gej_is_infinity(&a[np])) {

|

||||||

|

continue;

|

||||||

|

}

|

||||||

|

state->ps[no].input_pos = np;

|

||||||

#ifdef USE_ENDOMORPHISM

|

#ifdef USE_ENDOMORPHISM

|

||||||

/* split na into na_1 and na_lam (where na = na_1 + na_lam*lambda, and na_1 and na_lam are ~128 bit) */

|

/* split na into na_1 and na_lam (where na = na_1 + na_lam*lambda, and na_1 and na_lam are ~128 bit) */

|

||||||

secp256k1_scalar_split_lambda(&na_1, &na_lam, na);

|

secp256k1_scalar_split_lambda(&state->ps[no].na_1, &state->ps[no].na_lam, &na[np]);

|

||||||

|

|

||||||

/* build wnaf representation for na_1 and na_lam. */

|

/* build wnaf representation for na_1 and na_lam. */

|

||||||

bits_na_1 = secp256k1_ecmult_wnaf(wnaf_na_1, 130, &na_1, WINDOW_A);

|

state->ps[no].bits_na_1 = secp256k1_ecmult_wnaf(state->ps[no].wnaf_na_1, 130, &state->ps[no].na_1, WINDOW_A);

|

||||||

bits_na_lam = secp256k1_ecmult_wnaf(wnaf_na_lam, 130, &na_lam, WINDOW_A);

|

state->ps[no].bits_na_lam = secp256k1_ecmult_wnaf(state->ps[no].wnaf_na_lam, 130, &state->ps[no].na_lam, WINDOW_A);

|

||||||

VERIFY_CHECK(bits_na_1 <= 130);

|

VERIFY_CHECK(state->ps[no].bits_na_1 <= 130);

|

||||||

VERIFY_CHECK(bits_na_lam <= 130);

|

VERIFY_CHECK(state->ps[no].bits_na_lam <= 130);

|

||||||

bits = bits_na_1;

|

if (state->ps[no].bits_na_1 > bits) {

|

||||||

if (bits_na_lam > bits) {

|

bits = state->ps[no].bits_na_1;

|

||||||

bits = bits_na_lam;

|

}

|

||||||

}

|

if (state->ps[no].bits_na_lam > bits) {

|

||||||

|

bits = state->ps[no].bits_na_lam;

|

||||||

|

}

|

||||||

#else

|

#else

|

||||||

/* build wnaf representation for na. */

|

/* build wnaf representation for na. */

|

||||||

bits_na = secp256k1_ecmult_wnaf(wnaf_na, 256, na, WINDOW_A);

|

state->ps[no].bits_na = secp256k1_ecmult_wnaf(state->ps[no].wnaf_na, 256, &na[np], WINDOW_A);

|

||||||

bits = bits_na;

|

if (state->ps[no].bits_na > bits) {

|

||||||

|

bits = state->ps[no].bits_na;

|

||||||

|

}

|

||||||

#endif

|

#endif

|

||||||

|

++no;

|

||||||

|

}

|

||||||

|

|

||||||

/* Calculate odd multiples of a.

|

/* Calculate odd multiples of a.

|

||||||

* All multiples are brought to the same Z 'denominator', which is stored

|

* All multiples are brought to the same Z 'denominator', which is stored

|

||||||

@ -338,29 +393,51 @@ static void secp256k1_ecmult(const secp256k1_ecmult_context *ctx, secp256k1_gej

|

|||||||

* of 1/Z, so we can use secp256k1_gej_add_zinv_var, which uses the same

|

* of 1/Z, so we can use secp256k1_gej_add_zinv_var, which uses the same

|

||||||

* isomorphism to efficiently add with a known Z inverse.

|

* isomorphism to efficiently add with a known Z inverse.

|

||||||

*/

|

*/

|

||||||

secp256k1_ecmult_odd_multiples_table_globalz_windowa(pre_a, &Z, a);

|

if (no > 0) {

|

||||||

|

/* Compute the odd multiples in Jacobian form. */

|

||||||

|

secp256k1_ecmult_odd_multiples_table(ECMULT_TABLE_SIZE(WINDOW_A), state->prej, state->zr, &a[state->ps[0].input_pos]);

|

||||||

|

for (np = 1; np < no; ++np) {

|

||||||

|

secp256k1_gej tmp = a[state->ps[np].input_pos];

|

||||||

|

#ifdef VERIFY

|

||||||

|

secp256k1_fe_normalize_var(&(state->prej[(np - 1) * ECMULT_TABLE_SIZE(WINDOW_A) + ECMULT_TABLE_SIZE(WINDOW_A) - 1].z));

|

||||||

|

#endif

|

||||||

|

secp256k1_gej_rescale(&tmp, &(state->prej[(np - 1) * ECMULT_TABLE_SIZE(WINDOW_A) + ECMULT_TABLE_SIZE(WINDOW_A) - 1].z));

|

||||||

|

secp256k1_ecmult_odd_multiples_table(ECMULT_TABLE_SIZE(WINDOW_A), state->prej + np * ECMULT_TABLE_SIZE(WINDOW_A), state->zr + np * ECMULT_TABLE_SIZE(WINDOW_A), &tmp);

|

||||||

|

secp256k1_fe_mul(state->zr + np * ECMULT_TABLE_SIZE(WINDOW_A), state->zr + np * ECMULT_TABLE_SIZE(WINDOW_A), &(a[state->ps[np].input_pos].z));

|

||||||

|

}

|

||||||

|

/* Bring them to the same Z denominator. */

|

||||||

|

secp256k1_ge_globalz_set_table_gej(ECMULT_TABLE_SIZE(WINDOW_A) * no, state->pre_a, &Z, state->prej, state->zr);

|

||||||

|

} else {

|

||||||

|

secp256k1_fe_set_int(&Z, 1);

|

||||||

|

}

|

||||||

|

|

||||||

#ifdef USE_ENDOMORPHISM

|

#ifdef USE_ENDOMORPHISM

|

||||||

for (i = 0; i < ECMULT_TABLE_SIZE(WINDOW_A); i++) {

|

for (np = 0; np < no; ++np) {

|

||||||

secp256k1_ge_mul_lambda(&pre_a_lam[i], &pre_a[i]);

|

for (i = 0; i < ECMULT_TABLE_SIZE(WINDOW_A); i++) {

|

||||||

|

secp256k1_ge_mul_lambda(&state->pre_a_lam[np * ECMULT_TABLE_SIZE(WINDOW_A) + i], &state->pre_a[np * ECMULT_TABLE_SIZE(WINDOW_A) + i]);

|

||||||

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

/* split ng into ng_1 and ng_128 (where ng = ng_1 + ng_128*2^128, and ng_1 and ng_128 are ~128 bit) */

|

if (ng) {

|

||||||

secp256k1_scalar_split_128(&ng_1, &ng_128, ng);

|

/* split ng into ng_1 and ng_128 (where ng = ng_1 + ng_128*2^128, and ng_1 and ng_128 are ~128 bit) */

|

||||||

|

secp256k1_scalar_split_128(&ng_1, &ng_128, ng);

|

||||||

|

|

||||||

/* Build wnaf representation for ng_1 and ng_128 */

|

/* Build wnaf representation for ng_1 and ng_128 */

|

||||||

bits_ng_1 = secp256k1_ecmult_wnaf(wnaf_ng_1, 129, &ng_1, WINDOW_G);

|

bits_ng_1 = secp256k1_ecmult_wnaf(wnaf_ng_1, 129, &ng_1, WINDOW_G);

|

||||||

bits_ng_128 = secp256k1_ecmult_wnaf(wnaf_ng_128, 129, &ng_128, WINDOW_G);

|

bits_ng_128 = secp256k1_ecmult_wnaf(wnaf_ng_128, 129, &ng_128, WINDOW_G);

|

||||||

if (bits_ng_1 > bits) {

|

if (bits_ng_1 > bits) {

|

||||||

bits = bits_ng_1;

|

bits = bits_ng_1;

|

||||||

}

|

}

|

||||||

if (bits_ng_128 > bits) {

|

if (bits_ng_128 > bits) {

|

||||||

bits = bits_ng_128;

|

bits = bits_ng_128;

|

||||||

|

}

|

||||||

}

|

}

|

||||||

#else

|

#else

|

||||||

bits_ng = secp256k1_ecmult_wnaf(wnaf_ng, 256, ng, WINDOW_G);

|

if (ng) {

|

||||||

if (bits_ng > bits) {

|

bits_ng = secp256k1_ecmult_wnaf(wnaf_ng, 256, ng, WINDOW_G);

|

||||||

bits = bits_ng;

|

if (bits_ng > bits) {

|

||||||

|

bits = bits_ng;

|

||||||

|

}

|

||||||

}

|

}

|

||||||

#endif

|

#endif

|

||||||

|

|

||||||

@ -370,13 +447,15 @@ static void secp256k1_ecmult(const secp256k1_ecmult_context *ctx, secp256k1_gej

|

|||||||

int n;

|

int n;

|

||||||

secp256k1_gej_double_var(r, r, NULL);

|

secp256k1_gej_double_var(r, r, NULL);

|

||||||

#ifdef USE_ENDOMORPHISM

|

#ifdef USE_ENDOMORPHISM

|

||||||

if (i < bits_na_1 && (n = wnaf_na_1[i])) {

|

for (np = 0; np < no; ++np) {

|

||||||

ECMULT_TABLE_GET_GE(&tmpa, pre_a, n, WINDOW_A);

|

if (i < state->ps[np].bits_na_1 && (n = state->ps[np].wnaf_na_1[i])) {

|

||||||

secp256k1_gej_add_ge_var(r, r, &tmpa, NULL);

|

ECMULT_TABLE_GET_GE(&tmpa, state->pre_a + np * ECMULT_TABLE_SIZE(WINDOW_A), n, WINDOW_A);

|

||||||

}

|

secp256k1_gej_add_ge_var(r, r, &tmpa, NULL);

|

||||||

if (i < bits_na_lam && (n = wnaf_na_lam[i])) {

|

}

|

||||||

ECMULT_TABLE_GET_GE(&tmpa, pre_a_lam, n, WINDOW_A);

|

if (i < state->ps[np].bits_na_lam && (n = state->ps[np].wnaf_na_lam[i])) {

|

||||||

secp256k1_gej_add_ge_var(r, r, &tmpa, NULL);

|

ECMULT_TABLE_GET_GE(&tmpa, state->pre_a_lam + np * ECMULT_TABLE_SIZE(WINDOW_A), n, WINDOW_A);

|

||||||

|

secp256k1_gej_add_ge_var(r, r, &tmpa, NULL);

|

||||||

|

}

|

||||||

}

|

}

|

||||||

if (i < bits_ng_1 && (n = wnaf_ng_1[i])) {

|

if (i < bits_ng_1 && (n = wnaf_ng_1[i])) {

|

||||||

ECMULT_TABLE_GET_GE_STORAGE(&tmpa, *ctx->pre_g, n, WINDOW_G);

|

ECMULT_TABLE_GET_GE_STORAGE(&tmpa, *ctx->pre_g, n, WINDOW_G);

|

||||||

@ -387,9 +466,11 @@ static void secp256k1_ecmult(const secp256k1_ecmult_context *ctx, secp256k1_gej

|

|||||||

secp256k1_gej_add_zinv_var(r, r, &tmpa, &Z);

|

secp256k1_gej_add_zinv_var(r, r, &tmpa, &Z);

|

||||||

}

|

}

|

||||||

#else

|

#else

|

||||||

if (i < bits_na && (n = wnaf_na[i])) {

|

for (np = 0; np < no; ++np) {

|

||||||

ECMULT_TABLE_GET_GE(&tmpa, pre_a, n, WINDOW_A);

|

if (i < state->ps[np].bits_na && (n = state->ps[np].wnaf_na[i])) {

|

||||||

secp256k1_gej_add_ge_var(r, r, &tmpa, NULL);

|

ECMULT_TABLE_GET_GE(&tmpa, state->pre_a + np * ECMULT_TABLE_SIZE(WINDOW_A), n, WINDOW_A);

|

||||||

|

secp256k1_gej_add_ge_var(r, r, &tmpa, NULL);

|

||||||

|

}

|

||||||

}

|

}

|

||||||

if (i < bits_ng && (n = wnaf_ng[i])) {

|

if (i < bits_ng && (n = wnaf_ng[i])) {

|

||||||

ECMULT_TABLE_GET_GE_STORAGE(&tmpa, *ctx->pre_g, n, WINDOW_G);

|

ECMULT_TABLE_GET_GE_STORAGE(&tmpa, *ctx->pre_g, n, WINDOW_G);

|

||||||

@ -403,4 +484,528 @@ static void secp256k1_ecmult(const secp256k1_ecmult_context *ctx, secp256k1_gej

|

|||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

static void secp256k1_ecmult(const secp256k1_ecmult_context *ctx, secp256k1_gej *r, const secp256k1_gej *a, const secp256k1_scalar *na, const secp256k1_scalar *ng) {

|

||||||

|

secp256k1_gej prej[ECMULT_TABLE_SIZE(WINDOW_A)];

|

||||||

|

secp256k1_fe zr[ECMULT_TABLE_SIZE(WINDOW_A)];

|

||||||

|

secp256k1_ge pre_a[ECMULT_TABLE_SIZE(WINDOW_A)];

|

||||||

|

struct secp256k1_strauss_point_state ps[1];

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

secp256k1_ge pre_a_lam[ECMULT_TABLE_SIZE(WINDOW_A)];

|

||||||

|

#endif

|

||||||

|

struct secp256k1_strauss_state state;

|

||||||

|

|

||||||

|

state.prej = prej;

|

||||||

|

state.zr = zr;

|

||||||

|

state.pre_a = pre_a;

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

state.pre_a_lam = pre_a_lam;

|

||||||

|

#endif

|

||||||

|

state.ps = ps;

|

||||||

|

secp256k1_ecmult_strauss_wnaf(ctx, &state, r, 1, a, na, ng);

|

||||||

|

}

|
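
The generalized Strauss loop above shares one doubling of `r` per bit position across all `no` points and only pays per-point additions. A minimal sketch of that interleaving, assuming plain integers in place of group elements and plain binary digits in place of wNAF digits (`sketch_interleaved_mul` is a hypothetical name, not part of the library):

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative only: computes sum(n[k] * a[k]) with one shared doubling
 * per bit position, the way secp256k1_ecmult_strauss_wnaf shares
 * secp256k1_gej_double_var across all points. Integers stand in for
 * curve points; binary digits stand in for wNAF digits. */
static long sketch_interleaved_mul(const long *a, const unsigned *n, size_t count, int bits) {
    long r = 0;
    int i;
    size_t k;
    assert(bits > 0 && bits <= 16);
    for (i = bits - 1; i >= 0; i--) {
        r = 2 * r; /* one doubling, shared by every point */
        for (k = 0; k < count; k++) {
            if ((n[k] >> i) & 1u) {
                r += a[k]; /* per-point addition when the digit is set */
            }
        }
    }
    return r;
}
```

With `count` points the loop performs `bits` doublings in total rather than `bits` per point, which is where the saving over repeated single multiplications comes from.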

||||||

|

|

||||||

|

static size_t secp256k1_strauss_scratch_size(size_t n_points) {

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

static const size_t point_size = (2 * sizeof(secp256k1_ge) + sizeof(secp256k1_gej) + sizeof(secp256k1_fe)) * ECMULT_TABLE_SIZE(WINDOW_A) + sizeof(struct secp256k1_strauss_point_state) + sizeof(secp256k1_gej) + sizeof(secp256k1_scalar);

|

||||||

|

#else

|

||||||

|

static const size_t point_size = (sizeof(secp256k1_ge) + sizeof(secp256k1_gej) + sizeof(secp256k1_fe)) * ECMULT_TABLE_SIZE(WINDOW_A) + sizeof(struct secp256k1_strauss_point_state) + sizeof(secp256k1_gej) + sizeof(secp256k1_scalar);

|

||||||

|

#endif

|

||||||

|

return n_points*point_size;

|

||||||

|

}

|

||||||

|

|

||||||

|

static int secp256k1_ecmult_strauss_batch(const secp256k1_ecmult_context *ctx, secp256k1_scratch *scratch, secp256k1_gej *r, const secp256k1_scalar *inp_g_sc, secp256k1_ecmult_multi_callback cb, void *cbdata, size_t n_points, size_t cb_offset) {

|

||||||

|

secp256k1_gej* points;

|

||||||

|

secp256k1_scalar* scalars;

|

||||||

|

struct secp256k1_strauss_state state;

|

||||||

|

size_t i;

|

||||||

|

|

||||||

|

secp256k1_gej_set_infinity(r);

|

||||||

|

if (inp_g_sc == NULL && n_points == 0) {

|

||||||

|

return 1;

|

||||||

|

}

|

||||||

|

|

||||||

|

if (!secp256k1_scratch_resize(scratch, secp256k1_strauss_scratch_size(n_points), STRAUSS_SCRATCH_OBJECTS)) {

|

||||||

|

return 0;

|

||||||

|

}

|

||||||

|

secp256k1_scratch_reset(scratch);

|

||||||

|

points = (secp256k1_gej*)secp256k1_scratch_alloc(scratch, n_points * sizeof(secp256k1_gej));

|

||||||

|

scalars = (secp256k1_scalar*)secp256k1_scratch_alloc(scratch, n_points * sizeof(secp256k1_scalar));

|

||||||

|

state.prej = (secp256k1_gej*)secp256k1_scratch_alloc(scratch, n_points * ECMULT_TABLE_SIZE(WINDOW_A) * sizeof(secp256k1_gej));

|

||||||

|

state.zr = (secp256k1_fe*)secp256k1_scratch_alloc(scratch, n_points * ECMULT_TABLE_SIZE(WINDOW_A) * sizeof(secp256k1_fe));

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

state.pre_a = (secp256k1_ge*)secp256k1_scratch_alloc(scratch, n_points * 2 * ECMULT_TABLE_SIZE(WINDOW_A) * sizeof(secp256k1_ge));

|

||||||

|

state.pre_a_lam = state.pre_a + n_points * ECMULT_TABLE_SIZE(WINDOW_A);

|

||||||

|

#else

|

||||||

|

state.pre_a = (secp256k1_ge*)secp256k1_scratch_alloc(scratch, n_points * ECMULT_TABLE_SIZE(WINDOW_A) * sizeof(secp256k1_ge));

|

||||||

|

#endif

|

||||||

|

state.ps = (struct secp256k1_strauss_point_state*)secp256k1_scratch_alloc(scratch, n_points * sizeof(struct secp256k1_strauss_point_state));

|

||||||

|

|

||||||

|

for (i = 0; i < n_points; i++) {

|

||||||

|

secp256k1_ge point;

|

||||||

|

if (!cb(&scalars[i], &point, i+cb_offset, cbdata)) return 0;

|

||||||

|

secp256k1_gej_set_ge(&points[i], &point);

|

||||||

|

}

|

||||||

|

secp256k1_ecmult_strauss_wnaf(ctx, &state, r, n_points, points, scalars, inp_g_sc);

|

||||||

|

return 1;

|

||||||

|

}

|

||||||

|

|

||||||

|

/* Wrapper for secp256k1_ecmult_multi_func interface */

|

||||||

|

static int secp256k1_ecmult_strauss_batch_single(const secp256k1_ecmult_context *actx, secp256k1_scratch *scratch, secp256k1_gej *r, const secp256k1_scalar *inp_g_sc, secp256k1_ecmult_multi_callback cb, void *cbdata, size_t n) {

|

||||||

|

return secp256k1_ecmult_strauss_batch(actx, scratch, r, inp_g_sc, cb, cbdata, n, 0);

|

||||||

|

}

|

||||||

|

|

||||||

|

static size_t secp256k1_strauss_max_points(secp256k1_scratch *scratch) {

|

||||||

|

return secp256k1_scratch_max_allocation(scratch, STRAUSS_SCRATCH_OBJECTS) / secp256k1_strauss_scratch_size(1);

|

||||||

|

}

|

||||||

|

|

||||||

|

/** Convert a number to WNAF notation.

|

||||||

|

* The number becomes represented by sum(2^{wi} * wnaf[i], i=0..WNAF_SIZE(w)-1) - return_val.

|

||||||

|

* It has the following guarantees:

|

||||||

|

* - each wnaf[i] is either 0 or an odd integer between -(1 << w) and (1 << w)

|

||||||

|

* - the number of words set is always WNAF_SIZE(w)

|

||||||

|

* - the returned skew is 0 without endomorphism, or 0 or 1 with endomorphism

|

||||||

|

*/

|

||||||

|

static int secp256k1_wnaf_fixed(int *wnaf, const secp256k1_scalar *s, int w) {

|

||||||

|

int sign = 0;

|

||||||

|

int skew = 0;

|

||||||

|

int pos = 1;

|

||||||

|

#ifndef USE_ENDOMORPHISM

|

||||||

|

secp256k1_scalar neg_s;

|

||||||

|

#endif

|

||||||

|

const secp256k1_scalar *work = s;

|

||||||

|

|

||||||

|

if (secp256k1_scalar_is_zero(s)) {

|

||||||

|

while (pos * w < WNAF_BITS) {

|

||||||

|

wnaf[pos] = 0;

|

||||||

|

++pos;

|

||||||

|

}

|

||||||

|

return 0;

|

||||||

|

}

|

||||||

|

|

||||||

|

if (secp256k1_scalar_is_even(s)) {

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

skew = 1;

|

||||||

|

#else

|

||||||

|

secp256k1_scalar_negate(&neg_s, s);

|

||||||

|

work = &neg_s;

|

||||||

|

sign = -1;

|

||||||

|

#endif

|

||||||

|

}

|

||||||

|

|

||||||

|

wnaf[0] = (secp256k1_scalar_get_bits_var(work, 0, w) + skew + sign) ^ sign;

|

||||||

|

|

||||||

|

while (pos * w < WNAF_BITS) {

|

||||||

|

int now = w;

|

||||||

|

int val;

|

||||||

|

if (now + pos * w > WNAF_BITS) {

|

||||||

|

now = WNAF_BITS - pos * w;

|

||||||

|

}

|

||||||

|

val = secp256k1_scalar_get_bits_var(work, pos * w, now);

|

||||||

|

if ((val & 1) == 0) {

|

||||||

|

wnaf[pos - 1] -= ((1 << w) + sign) ^ sign;

|

||||||

|

wnaf[pos] = (val + 1 + sign) ^ sign;

|

||||||

|

} else {

|

||||||

|

wnaf[pos] = (val + sign) ^ sign;

|

||||||

|

}

|

||||||

|

++pos;

|

||||||

|

}

|

||||||

|

VERIFY_CHECK(pos == WNAF_SIZE(w));

|

||||||

|

|

||||||

|

return skew;

|

||||||

|

}

|
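
To make the fixed-window recoding in `secp256k1_wnaf_fixed` easier to follow, here is a small sketch of the skew variant (the `USE_ENDOMORPHISM` branch) on a 16-bit integer. The names `SKETCH_BITS`, `SKETCH_SIZE`, `sketch_wnaf_fixed` and `sketch_wnaf_eval` are assumptions made for illustration; the real function works on `secp256k1_scalar` and `WNAF_BITS`.

```c
#include <assert.h>
#include <stdint.h>

#define SKETCH_BITS 16
#define SKETCH_SIZE(w) ((SKETCH_BITS + (w) - 1) / (w))

/* Recode a nonzero s < 2^16 into SKETCH_SIZE(w) odd digits.
 * Returns the skew (1 if s was even, else 0), so that
 * s == sum(2^(w*i) * wnaf[i]) - skew. */
static int sketch_wnaf_fixed(int *wnaf, uint32_t s, int w) {
    int skew = 0;
    int pos = 1;
    assert(w >= 1 && w <= 8);
    assert(s != 0 && s < (1u << SKETCH_BITS));
    if ((s & 1u) == 0) {
        skew = 1; /* make the lowest digit odd; corrected by the caller */
    }
    wnaf[0] = (int)(s & ((1u << w) - 1)) + skew;
    while (pos * w < SKETCH_BITS) {
        int now = w;
        int val;
        if (now + pos * w > SKETCH_BITS) {
            now = SKETCH_BITS - pos * w; /* last window may be shorter */
        }
        val = (int)((s >> (pos * w)) & ((1u << now) - 1));
        if ((val & 1) == 0) {
            /* Even digit: add 1 here and subtract 2^w one position lower. */
            wnaf[pos - 1] -= (1 << w);
            wnaf[pos] = val + 1;
        } else {
            wnaf[pos] = val;
        }
        ++pos;
    }
    return skew;
}

/* Evaluate the digits back into an integer to check the recoding. */
static long sketch_wnaf_eval(const int *wnaf, int w, int skew) {
    long acc = 0;
    int i;
    for (i = SKETCH_SIZE(w) - 1; i >= 0; i--) {
        acc = acc * (1L << w) + wnaf[i];
    }
    return acc - skew;
}
```

For example, recoding 20 with `w = 3` should give a skew of 1 and odd digits that evaluate back to 20 after the skew is subtracted.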

||||||

|

|

||||||

|

struct secp256k1_pippenger_point_state {

|

||||||

|

int skew_na;

|

||||||

|

size_t input_pos;

|

||||||

|

};

|

||||||

|

|

||||||

|

struct secp256k1_pippenger_state {

|

||||||

|

int *wnaf_na;

|

||||||

|

struct secp256k1_pippenger_point_state* ps;

|

||||||

|

};

|

||||||

|

|

||||||

|

/*

|

||||||

|

* pippenger_wnaf computes the result of a multi-point multiplication as

|

||||||

|

* follows: The scalars are brought into wnaf with n_wnaf elements each. Then

|

||||||

|

* for every i < n_wnaf, first each point is added to a "bucket" corresponding

|

||||||

|

* to the point's wnaf[i]. Second, the buckets are added together such that

|

||||||

|

* r += 1*bucket[0] + 3*bucket[1] + 5*bucket[2] + ...

|

||||||

|

*/

|

||||||

|

static int secp256k1_ecmult_pippenger_wnaf(secp256k1_gej *buckets, int bucket_window, struct secp256k1_pippenger_state *state, secp256k1_gej *r, secp256k1_scalar *sc, secp256k1_ge *pt, size_t num) {

|

||||||

|

size_t n_wnaf = WNAF_SIZE(bucket_window+1);

|

||||||

|

size_t np;

|

||||||

|

size_t no = 0;

|

||||||

|

int i;

|

||||||

|

int j;

|

||||||

|

|

||||||

|

for (np = 0; np < num; ++np) {

|

||||||

|

if (secp256k1_scalar_is_zero(&sc[np]) || secp256k1_ge_is_infinity(&pt[np])) {

|

||||||

|

continue;

|

||||||

|

}

|

||||||

|

state->ps[no].input_pos = np;

|

||||||

|

state->ps[no].skew_na = secp256k1_wnaf_fixed(&state->wnaf_na[no*n_wnaf], &sc[np], bucket_window+1);

|

||||||

|

no++;

|

||||||

|

}

|

||||||

|

secp256k1_gej_set_infinity(r);

|

||||||

|

|

||||||

|

if (no == 0) {

|

||||||

|

return 1;

|

||||||

|

}

|

||||||

|

|

||||||

|

for (i = n_wnaf - 1; i >= 0; i--) {

|

||||||

|

secp256k1_gej running_sum;

|

||||||

|

|

||||||

|

for(j = 0; j < ECMULT_TABLE_SIZE(bucket_window+2); j++) {

|

||||||

|

secp256k1_gej_set_infinity(&buckets[j]);

|

||||||

|

}

|

||||||

|

|

||||||

|

for (np = 0; np < no; ++np) {

|

||||||

|

int n = state->wnaf_na[np*n_wnaf + i];

|

||||||

|

struct secp256k1_pippenger_point_state point_state = state->ps[np];

|

||||||

|

secp256k1_ge tmp;

|

||||||

|

int idx;

|

||||||

|

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

if (i == 0) {

|

||||||

|

/* correct for wnaf skew */

|

||||||

|

int skew = point_state.skew_na;

|

||||||

|

if (skew) {

|

||||||

|

secp256k1_ge_neg(&tmp, &pt[point_state.input_pos]);

|

||||||

|

secp256k1_gej_add_ge_var(&buckets[0], &buckets[0], &tmp, NULL);

|

||||||

|

}

|

||||||

|

}

|

||||||

|

#endif

|

||||||

|

if (n > 0) {

|

||||||

|

idx = (n - 1)/2;

|

||||||

|

secp256k1_gej_add_ge_var(&buckets[idx], &buckets[idx], &pt[point_state.input_pos], NULL);

|

||||||

|

} else if (n < 0) {

|

||||||

|

idx = -(n + 1)/2;

|

||||||

|

secp256k1_ge_neg(&tmp, &pt[point_state.input_pos]);

|

||||||

|

secp256k1_gej_add_ge_var(&buckets[idx], &buckets[idx], &tmp, NULL);

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

for(j = 0; j < bucket_window; j++) {

|

||||||

|

secp256k1_gej_double_var(r, r, NULL);

|

||||||

|

}

|

||||||

|

|

||||||

|

secp256k1_gej_set_infinity(&running_sum);

|

||||||

|

/* Accumulate the sum: bucket[0] + 3*bucket[1] + 5*bucket[2] + 7*bucket[3] + ...

|

||||||

|

* = bucket[0] + bucket[1] + bucket[2] + bucket[3] + ...

|

||||||

|

* + 2 * (bucket[1] + 2*bucket[2] + 3*bucket[3] + ...)

|

||||||

|

* using an intermediate running sum:

|

||||||

|

* running_sum = bucket[0] + bucket[1] + bucket[2] + ...

|

||||||

|

*

|

||||||

|

* The doubling is done implicitly by deferring the final window doubling (of 'r').

|

||||||

|

*/

|

||||||

|

for(j = ECMULT_TABLE_SIZE(bucket_window+2) - 1; j > 0; j--) {

|

||||||

|

secp256k1_gej_add_var(&running_sum, &running_sum, &buckets[j], NULL);

|

||||||

|

secp256k1_gej_add_var(r, r, &running_sum, NULL);

|

||||||

|

}

|

||||||

|

|

||||||

|

secp256k1_gej_add_var(&running_sum, &running_sum, &buckets[0], NULL);

|

||||||

|

secp256k1_gej_double_var(r, r, NULL);

|

||||||

|

secp256k1_gej_add_var(r, r, &running_sum, NULL);

|

||||||

|

}

|

||||||

|

return 1;

|

||||||

|

}

|
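
The running-sum comment in `secp256k1_ecmult_pippenger_wnaf` can be checked with ordinary integers. The sketch below, with hypothetical helper names and `long long` standing in for group elements, compares the direct weighted sum with the running-sum form that uses a single deferred doubling:

```c
#include <assert.h>
#include <stddef.h>

/* Direct form: bucket[0] + 3*bucket[1] + 5*bucket[2] + ... */
static long long sketch_bucket_sum_naive(const long long *buckets, size_t n) {
    long long acc = 0;
    size_t j;
    for (j = 0; j < n; j++) {
        acc += (long long)(2 * j + 1) * buckets[j];
    }
    return acc;
}

/* Running-sum form, mirroring the loop order of the patch:
 * after the loop, r == sum(j * bucket[j]); doubling it and adding the
 * sum of all buckets yields sum((2j + 1) * bucket[j]). */
static long long sketch_bucket_sum_running(const long long *buckets, size_t n) {
    long long running_sum = 0;
    long long r = 0;
    size_t j;
    assert(n > 0);
    for (j = n - 1; j > 0; j--) {
        running_sum += buckets[j];
        r += running_sum;
    }
    running_sum += buckets[0];
    r = 2 * r; /* the deferred doubling */
    r += running_sum;
    return r;
}
```

In the patch the same doubling also acts on the accumulated `r` from higher windows, so together with the `bucket_window` doublings before it each window shifts `r` by `bucket_window+1` bits, matching the digit width passed to `secp256k1_wnaf_fixed`.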

||||||

|

|

||||||

|

/**

|

||||||

|

* Returns optimal bucket_window (number of bits of a scalar represented by a

|

||||||

|

* set of buckets) for a given number of points.

|

||||||

|

*/

|

||||||

|

static int secp256k1_pippenger_bucket_window(size_t n) {

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

if (n <= 1) {

|

||||||

|

return 1;

|

||||||

|

} else if (n <= 4) {

|

||||||

|

return 2;

|

||||||

|

} else if (n <= 20) {

|

||||||

|

return 3;

|

||||||

|

} else if (n <= 57) {

|

||||||

|

return 4;

|

||||||

|

} else if (n <= 136) {

|

||||||

|

return 5;

|

||||||

|

} else if (n <= 235) {

|

||||||

|

return 6;

|

||||||

|

} else if (n <= 1260) {

|

||||||

|

return 7;

|

||||||

|

} else if (n <= 4420) {

|

||||||

|

return 9;

|

||||||

|

} else if (n <= 7880) {

|

||||||

|

return 10;

|

||||||

|

} else if (n <= 16050) {

|

||||||

|

return 11;

|

||||||

|

} else {

|

||||||

|

return PIPPENGER_MAX_BUCKET_WINDOW;

|

||||||

|

}

|

||||||

|

#else

|

||||||

|

if (n <= 1) {

|

||||||

|

return 1;

|

||||||

|

} else if (n <= 11) {

|

||||||

|

return 2;

|

||||||

|

} else if (n <= 45) {

|

||||||

|

return 3;

|

||||||

|

} else if (n <= 100) {

|

||||||

|

return 4;

|

||||||

|

} else if (n <= 275) {

|

||||||

|

return 5;

|

||||||

|

} else if (n <= 625) {

|

||||||

|

return 6;

|

||||||

|

} else if (n <= 1850) {

|

||||||

|

return 7;

|

||||||

|

} else if (n <= 3400) {

|

||||||

|

return 8;

|

||||||

|

} else if (n <= 9630) {

|

||||||

|

return 9;

|

||||||

|

} else if (n <= 17900) {

|

||||||

|

return 10;

|

||||||

|

} else if (n <= 32800) {

|

||||||

|

return 11;

|

||||||

|

} else {

|

||||||

|

return PIPPENGER_MAX_BUCKET_WINDOW;

|

||||||

|

}

|

||||||

|

#endif

|

||||||

|

}

|

||||||

|

|

||||||

|

/**

|

||||||

|

* Returns the maximum optimal number of points for a bucket_window.

|

||||||

|

*/

|

||||||

|

static size_t secp256k1_pippenger_bucket_window_inv(int bucket_window) {

|

||||||

|

switch(bucket_window) {

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

case 1: return 1;

|

||||||

|

case 2: return 4;

|

||||||

|

case 3: return 20;

|

||||||

|

case 4: return 57;

|

||||||

|

case 5: return 136;

|

||||||

|

case 6: return 235;

|

||||||

|

case 7: return 1260;

|

||||||

|

case 8: return 1260;

|

||||||

|

case 9: return 4420;

|

||||||

|

case 10: return 7880;

|

||||||

|

case 11: return 16050;

|

||||||

|

case PIPPENGER_MAX_BUCKET_WINDOW: return SIZE_MAX;

|

||||||

|

#else

|

||||||

|

case 1: return 1;

|

||||||

|

case 2: return 11;

|

||||||

|

case 3: return 45;

|

||||||

|

case 4: return 100;

|

||||||

|

case 5: return 275;

|

||||||

|

case 6: return 625;

|

||||||

|

case 7: return 1850;

|

||||||

|

case 8: return 3400;

|

||||||

|

case 9: return 9630;

|

||||||

|

case 10: return 17900;

|

||||||

|

case 11: return 32800;

|

||||||

|

case PIPPENGER_MAX_BUCKET_WINDOW: return SIZE_MAX;

|

||||||

|

#endif

|

||||||

|

}

|

||||||

|

return 0;

|

||||||

|

}

|
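
The two lookup functions above are inverses of each other on the threshold values. A table-driven sketch of the non-endomorphism branch, assuming a maximum window of 12 for `PIPPENGER_MAX_BUCKET_WINDOW` (its value is not shown in this hunk) and using hypothetical `sketch_` names:

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_MAX_WINDOW 12 /* assumption: stands in for PIPPENGER_MAX_BUCKET_WINDOW */

/* Largest point count for which window i+1 is chosen (values from the #else branch). */
static const size_t sketch_limits[] = {1, 11, 45, 100, 275, 625, 1850, 3400, 9630, 17900, 32800};
#define SKETCH_N_LIMITS (sizeof(sketch_limits) / sizeof(sketch_limits[0]))

/* Window for n points: the first threshold that n does not exceed. */
static int sketch_bucket_window(size_t n) {
    size_t i;
    for (i = 0; i < SKETCH_N_LIMITS; i++) {
        if (n <= sketch_limits[i]) {
            return (int)i + 1;
        }
    }
    return SKETCH_MAX_WINDOW;
}

/* Largest n that still maps to window w; unbounded for the maximum window. */
static size_t sketch_bucket_window_inv(int w) {
    assert(w >= 1 && w <= SKETCH_MAX_WINDOW);
    if (w == SKETCH_MAX_WINDOW) {
        return (size_t)-1;
    }
    return sketch_limits[w - 1];
}
```

Keeping both directions in one table avoids the two `if`/`switch` ladders drifting apart. The endomorphism branch would need extra care, since there window 8 is never selected while its inverse entry repeats 1260.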

||||||

|

|

||||||

|

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

SECP256K1_INLINE static void secp256k1_ecmult_endo_split(secp256k1_scalar *s1, secp256k1_scalar *s2, secp256k1_ge *p1, secp256k1_ge *p2) {

|

||||||

|

secp256k1_scalar tmp = *s1;

|

||||||

|

secp256k1_scalar_split_lambda(s1, s2, &tmp);

|

||||||

|

secp256k1_ge_mul_lambda(p2, p1);

|

||||||

|

|

||||||

|

if (secp256k1_scalar_is_high(s1)) {

|

||||||

|

secp256k1_scalar_negate(s1, s1);

|

||||||

|

secp256k1_ge_neg(p1, p1);

|

||||||

|

}

|

||||||

|

if (secp256k1_scalar_is_high(s2)) {

|

||||||

|

secp256k1_scalar_negate(s2, s2);

|

||||||

|

secp256k1_ge_neg(p2, p2);

|

||||||

|

}

|

||||||

|

}

|

||||||

|

#endif

|

||||||

|

|

||||||

|

/**

|

||||||

|

* Returns the scratch size required for a given number of points (excluding

|

||||||

|

* base point G) without considering alignment.

|

||||||

|

*/

|

||||||

|

static size_t secp256k1_pippenger_scratch_size(size_t n_points, int bucket_window) {

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

size_t entries = 2*n_points + 2;

|

||||||

|

#else

|

||||||

|

size_t entries = n_points + 1;

|

||||||

|

#endif

|

||||||

|

size_t entry_size = sizeof(secp256k1_ge) + sizeof(secp256k1_scalar) + sizeof(struct secp256k1_pippenger_point_state) + (WNAF_SIZE(bucket_window+1)+1)*sizeof(int);

|

||||||

|

return ((1<<bucket_window) * sizeof(secp256k1_gej) + sizeof(struct secp256k1_pippenger_state) + entries * entry_size);

|

||||||

|

}

|

||||||

|

|

||||||

|

static int secp256k1_ecmult_pippenger_batch(const secp256k1_ecmult_context *ctx, secp256k1_scratch *scratch, secp256k1_gej *r, const secp256k1_scalar *inp_g_sc, secp256k1_ecmult_multi_callback cb, void *cbdata, size_t n_points, size_t cb_offset) {

|

||||||

|

/* Use 2(n+1) with the endomorphism, n+1 without, when calculating batch

|

||||||

|

* sizes. The reason for +1 is that we add the G scalar to the list of

|

||||||

|

* other scalars. */

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

size_t entries = 2*n_points + 2;

|

||||||

|

#else

|

||||||

|

size_t entries = n_points + 1;

|

||||||

|

#endif

|

||||||

|

secp256k1_ge *points;

|

||||||

|

secp256k1_scalar *scalars;

|

||||||

|

secp256k1_gej *buckets;

|

||||||

|

struct secp256k1_pippenger_state *state_space;

|

||||||

|

size_t idx = 0;

|

||||||

|

size_t point_idx = 0;

|

||||||

|

int i, j;

|

||||||

|

int bucket_window;

|

||||||

|

|

||||||

|

(void)ctx;

|

||||||

|

secp256k1_gej_set_infinity(r);

|

||||||

|

if (inp_g_sc == NULL && n_points == 0) {

|

||||||

|

return 1;

|

||||||

|

}

|

||||||

|

|

||||||

|

bucket_window = secp256k1_pippenger_bucket_window(n_points);

|

||||||

|

if (!secp256k1_scratch_resize(scratch, secp256k1_pippenger_scratch_size(n_points, bucket_window), PIPPENGER_SCRATCH_OBJECTS)) {

|

||||||

|

return 0;

|

||||||

|

}

|

||||||

|

secp256k1_scratch_reset(scratch);

|

||||||

|

points = (secp256k1_ge *) secp256k1_scratch_alloc(scratch, entries * sizeof(*points));

|

||||||

|

scalars = (secp256k1_scalar *) secp256k1_scratch_alloc(scratch, entries * sizeof(*scalars));

|

||||||

|

state_space = (struct secp256k1_pippenger_state *) secp256k1_scratch_alloc(scratch, sizeof(*state_space));

|

||||||

|

state_space->ps = (struct secp256k1_pippenger_point_state *) secp256k1_scratch_alloc(scratch, entries * sizeof(*state_space->ps));

|

||||||

|

state_space->wnaf_na = (int *) secp256k1_scratch_alloc(scratch, entries*(WNAF_SIZE(bucket_window+1)) * sizeof(int));

|

||||||

|

buckets = (secp256k1_gej *) secp256k1_scratch_alloc(scratch, (1<<bucket_window) * sizeof(*buckets));

|

||||||

|

|

||||||

|

if (inp_g_sc != NULL) {

|

||||||

|

scalars[0] = *inp_g_sc;

|

||||||

|

points[0] = secp256k1_ge_const_g;

|

||||||

|

idx++;

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

secp256k1_ecmult_endo_split(&scalars[0], &scalars[1], &points[0], &points[1]);

|

||||||

|

idx++;

|

||||||

|

#endif

|

||||||

|

}

|

||||||

|

|

||||||

|

while (point_idx < n_points) {

|

||||||

|

if (!cb(&scalars[idx], &points[idx], point_idx + cb_offset, cbdata)) {

|

||||||

|

return 0;

|

||||||

|

}

|

||||||

|

idx++;

|

||||||

|

#ifdef USE_ENDOMORPHISM

|

||||||

|

secp256k1_ecmult_endo_split(&scalars[idx - 1], &scalars[idx], &points[idx - 1], &points[idx]);

|

||||||

|

idx++;

|

||||||

|

#endif

|

||||||

|

point_idx++;

|

||||||

|

}

|

||||||

|

|

||||||

|

secp256k1_ecmult_pippenger_wnaf(buckets, bucket_window, state_space, r, scalars, points, idx);

|

||||||

|

|

||||||

|

/* Clear data */

|

||||||

|

for(i = 0; (size_t)i < idx; i++) {

|

||||||

|

secp256k1_scalar_clear(&scalars[i]);

|

||||||

|

state_space->ps[i].skew_na = 0;

|

||||||

|

for(j = 0; j < WNAF_SIZE(bucket_window+1); j++) {

|

||||||

|